KINIGUIDE | The recent controversy over a Universiti Putra Malaysia (UPM) paper on maritime history, and the university’s assertion that the paper was peer-reviewed, have brought public attention to a longstanding problem in academic publishing.

The peer review process has long been an integral part of academic scholarship. While not foolproof - various forms of mistakes and misconduct still slip through the cracks - it remains a crucial measure for ensuring the quality of published research.

However, the past two decades have seen the proliferation of a new type of journal that eschews any meaningful quality control in the name of profit and exploitation.

In this instalment of KiniGuide, we look at predatory journals and their publishers, and their taint on academic scholarship.

What is peer review?

Peer review is typically the second of two layers of quality control in academic publishing.

During an editorial review, a journal’s editors decide whether a paper submitted to the journal is new, interesting to its readership, and significant enough to be featured. If the answer is yes and there are no obvious problems, the paper will be sent to experts in the relevant fields for peer review.

It would be up to these experts to vet the validity of the paper and its findings.

These reviewers work voluntarily, and the quality of their review depends on both their personal diligence and their expertise. It is therefore important for journal editors to select the right people for each paper.

Guidelines by the journal Nature ask reviewers to provide an assessment on matters such as the quality of the data, the validity of the data interpretation and conclusion, and whether the conclusion is original (ie whether it offers new insights) and is interesting to fellow experts of that field.

It also asks reviewers to suggest additional data or experiments that could help strengthen the paper and to disclose if there is any part of the paper that they feel is beyond their expertise to assess fully.

A journal’s editors have the final say on whether the paper should be published, amended, or rejected outright, but in any proper peer review, the reviewers hold significant sway over that decision.

How do journals make money?

The popularity of open-access journals has skyrocketed over the last two decades.

Journals have traditionally accepted and published papers for free but charge a subscription fee to anyone who wants to read them. Open-access journals flip this around by imposing a fee on the paper’s authors or their institutions (known as an article processing charge, or APC) but make the article free for everyone to share and read under very permissive copyright terms.

The APC can vary widely from tens of dollars (US$82 or about RM387 in the case of the International Journal of Academic Research in Business and Social Sciences, where UPM’s controversial paper was published), to the tens of thousands (€10,290 or about RM52,900 in the highly prestigious Nature).

The publisher keeps all the money - none is channelled back to the authors or reviewers.

Despite making their content available for free, open-access journals can still have robust safeguards to ensure the quality of the research being published.

The open-access model can sometimes exist side-by-side with the subscription model. These journal titles offer authors the option to choose between the two.

Open-access journals are generally seen as a positive development because they make the latest research available to a broader audience, not all of whom would have access to a well-stocked library.

Many funding agencies now require researchers who receive their money to publish findings in open-access journals.

How do predatory journals fit in this ecosystem?

Predatory journals add a sinister twist to the open-access model by having little to no quality control at either the editorial or the peer review stage. They often do this despite claiming to have a robust peer review process in place.

They may also send papers to reviewers who are ill-suited for the task so that they can bolster their claim to be “peer-reviewed”, even if it does little for the quality of the published paper.

These shoddy practices sacrifice quality for scale by publishing large numbers of low-quality papers on a broad range of subjects, and collecting the APC on each paper.

Some predatory journals also engage in other exploitative or deceptive behaviour, such as aggressively soliciting papers from researchers and then invoicing them for charges that were not previously disclosed.

Despite the name, not everyone who submits papers to predatory journals is “prey”. Some researchers knowingly submit low-quality work to such journals as a way to pad their curriculum vitae and advance their careers.

Overall, predatory journals and their publishers help spread misinformation. They also sow public distrust towards legitimate researchers and their work.

How bad can it get?

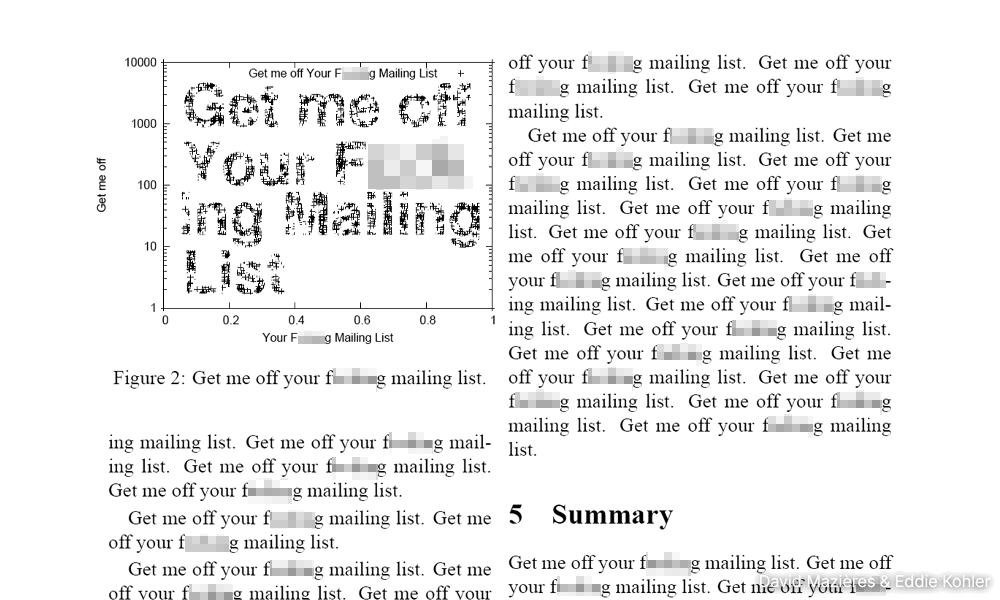

An infamous example of the lack of quality control in predatory journals came when Australian researcher Peter Vamplew got tired of spam mail from the International Journal of Advanced Computer Technology inviting him to submit papers.

So, in 2014, he sent them a 10-page paper that comprised the same sentence repeated over and over again throughout the entire document, instructing them to remove him from their mailing list.

The sentence is seven words long and includes a crude “f-word” fully spelt out that also appears in “creatively” arranged charts.

Weeks later, Vamplew got a reply informing him that his article had been accepted for publication, along with a review form remarking that his paper was “excellent” and that the quality of the writing was “very good”.

According to a 2014 report by UK newspaper The Guardian, however, the paper was never published because Vamplew refused to pay the US$150 (RM710) APC. He reportedly remained on the journal’s mailing list, and the journal continues to operate as usual to this day.

A more serious attempt to gauge the problem was made a year earlier when the journal Science conducted a sting operation.

It concocted fictitious authors working for made-up institutions, submitting near-identical papers containing numerous scientific errors and ethical red flags that would warrant rejection before even undergoing peer review.

This “bait” was sent to hundreds of journals drawn from the Directory of Open Access Journals (DOAJ; a “whitelist” of open-access journals believed to be legitimate) and Beall’s List (a “blacklist” of open-access publishers suspected to be predatory).

Ultimately, 157 journals accepted the paper and only 98 journals had the good sense to reject it. Most of those caught in the sting operation (93 out of 157) were journals that appeared on Beall’s List, though several journals from reputable publishers were also caught out.
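To put those figures in proportion, here is a minimal sketch of the arithmetic in Python. It uses only the three counts quoted above; the variable names are ours, and journals that never reached a decision on the paper are left out of the calculation.

```python
# Counts quoted above from Science's 2013 sting operation.
accepted = 157                 # journals that accepted the bogus paper
rejected = 98                  # journals that rejected it
accepted_on_bealls_list = 93   # accepting journals that appeared on Beall's List

# Only journals that reached a decision are counted here.
decided = accepted + rejected

# Share of deciding journals that accepted the paper.
acceptance_rate = accepted / decided

# Share of the accepting journals that were on Beall's List.
bealls_share = accepted_on_bealls_list / accepted

print(f"Journals that reached a decision: {decided}")
print(f"Accepted the bogus paper: {acceptance_rate:.1%}")
print(f"Acceptances from journals on Beall's List: {bealls_share:.1%}")
```

In other words, roughly 62 percent of the 255 journals that reached a decision accepted the paper, and about 59 percent of those acceptances came from journals on Beall’s List.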

Most of the accepted papers did not appear to have undergone any review, or received only a superficial one focused on the paper’s layout, formatting, or language. Of the submissions that did receive a damning peer review recognising their flaws, 16 were nevertheless accepted for publication.

How does academia fight predatory journals?

Researchers and librarians have responded to predatory publishing primarily through awareness campaigns.

University libraries regularly conduct workshops for their users, and many (including UPM’s Sultan Abdul Samad Library) have webpages explaining the dangers of predatory journals and how to recognise them.

The academic publishing industry collaborated in 2015 to launch the “Think. Check. Submit.” campaign, which provides a checklist and other resources to help researchers choose where to publish their work and steer away from predatory journals.

Another approach is to “whitelist” journals believed to be legitimate or “blacklist” those believed to be predatory.

However, predatory journals can still be hard to identify, and the endeavour is complicated by the lack of consensus on what should be considered predatory publishing.

A prominent example of a whitelist is the DOAJ, which gives recognition to open-access journals for adhering to a set of best practices.

Blacklisting is a more controversial approach because such lists are often based on suspicion rather than evidence of wrongdoing, yet are very damaging to a journal’s reputation.

Blacklists also tend to attract protests and threats of lawsuits from aggrieved publishers.

The best-known blacklist is Beall’s List of “probable” predatory journals, curated by former University of Colorado librarian Jeffrey Beall, who is also widely credited with coining the term “predatory publishers” in 2010.

Beall shut down his blog along with his list in 2017, citing pressure from his employer (the University of Colorado has disputed his claim), but anonymous groups of researchers have published copies of the list and strive to keep it updated.

Meanwhile, the Texas-based journal analytics company Cabells maintains a whitelist and blacklist for its subscribers, based on a set of criteria such as whether the journal has provided a fake office address.

How well do these lists perform?

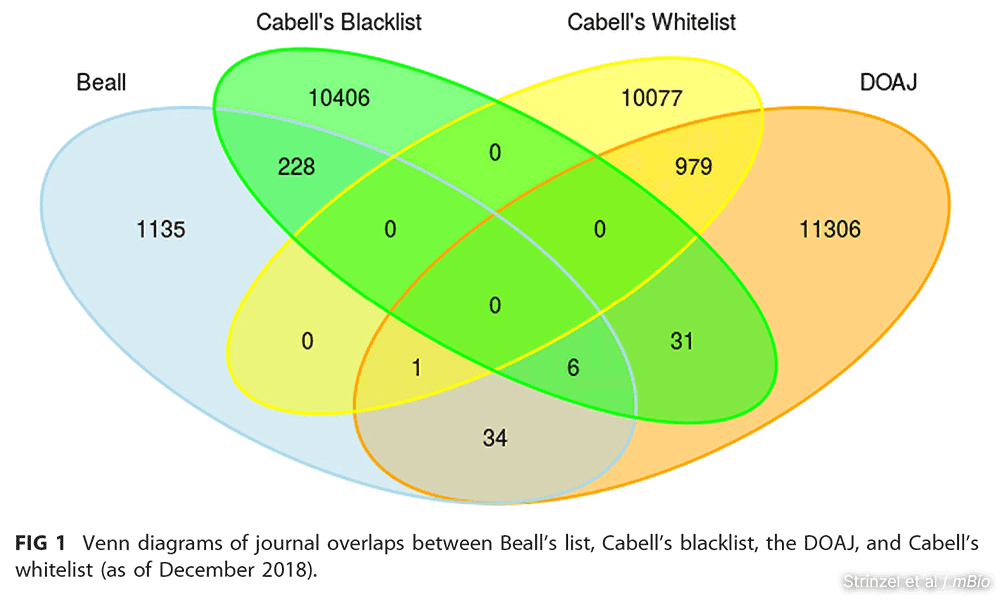

Despite their intentions, the lists are not foolproof and sometimes even contradict each other.

Of the journals on Beall’s List that responded to Science’s 2013 sting operation, 82 percent accepted the bogus paper. The report surmised that Beall was adept at spotting publishers with poor quality control, yet nearly one in five still did the sensible thing and rejected the bait.

Conversely, 45 percent of the DOAJ member journals (the ones on the whitelist) that responded to the bait ultimately accepted the paper. The DOAJ has since pledged to tighten its rules, and in 2014, it overhauled its list in an effort to clean it up.

Meanwhile, a 2019 analysis found that 34 journals blacklisted by Beall are whitelisted by the DOAJ, and one other journal is whitelisted by both the DOAJ and Cabells.

Cabells’ blacklist was found to contain 37 journals thought to be legitimate by the DOAJ.

The analysis, published in the journal mBio, surmised that the overlap means some journals have been misclassified or “operate in a grey zone between fraud and legitimacy”.

It also found that whitelists and blacklists both tend to emphasise criteria that are easily verifiable such as those that relate to transparency, while criteria that are crucial but harder to assess - such as the quality of a journal’s peer review - are less well covered.

What about ERA?

In defending the article “The Jongs and The Galleys: Traditional Ships of the Past Malay Maritime Civilization”, UPM highlighted that it was published in a peer-reviewed journal listed in the “ERA database”, without clarifying what it was referring to.

This most likely refers to a list published under the Australian Research Council’s Excellence in Research for Australia initiative.

It is a list of journals being monitored to assess Australia’s research output, and according to UPM’s library website, has also been adopted by Malaysia for similar purposes under the Malaysia Research Assessment Instrument II.

The website states that UPM’s School of Graduate Studies requires its students who are publishing papers to do so with a journal listed in one of three databases - Elsevier’s Scopus, Clarivate’s Journal Citation Reports, or ERA.

However, ERA is not intended as a whitelist of journals considered to be of high quality.

The Australian government website regarding the initiative states: “It is not designed to be used outside of the ERA process, and it must not be used as a future guide to publishing or as a quality indicator for publishing outlets.”

In other words, Australian research that gets published in an ERA-listed journal will be counted towards the country’s research-related key performance indicators, but that doesn’t necessarily mean it is a good journal.

The Australian Research Council is currently overhauling its evaluation process but has not disclosed details.

The International Journal of Academic Research in Business and Social Sciences has removed the UPM article from its website, with its publisher telling Malaysiakini it is “on hold” pending an investigation. It denies being a predatory publisher despite appearing on some blacklists.
