Associate professor Mohd Azizuddin Mohd Sani of Universiti Utara Malaysia offered a critique of our latest Invoke Centre for Policy Initiatives (I-CPI) survey, as reported in Malaysiakini on July 26, 2017. We would like to address his concerns about our response rate and the representativeness of our surveys.

Azizuddin cited the fact that many of the people we contacted did not answer our survey questions as a key reason why, in his view, the survey finding does not reflect the actual level of support for BN, Pakatan Harapan and PAS.

But a survey with a low response rate is not necessarily inaccurate.

I-CPI would like to take this opportunity to share the nitty-gritty of running a poll, so that the public understands why we decided to publish three important pieces of information that no other poll conducted so far has disclosed.

For every survey finding that is made available to the public, I-CPI publishes the number of voters actually called, the number of voters who answered, and the number of voters who stayed on the line to answer all the questions.

For the latest survey disputed by Azizuddin (photo), our proprietary computerised polling system randomly called about 2.5 million voters, of whom 160,761 actually picked up the call. In the end, 17,107 of them completed the survey, and their responses formed the data for the finding that was shared with the public.

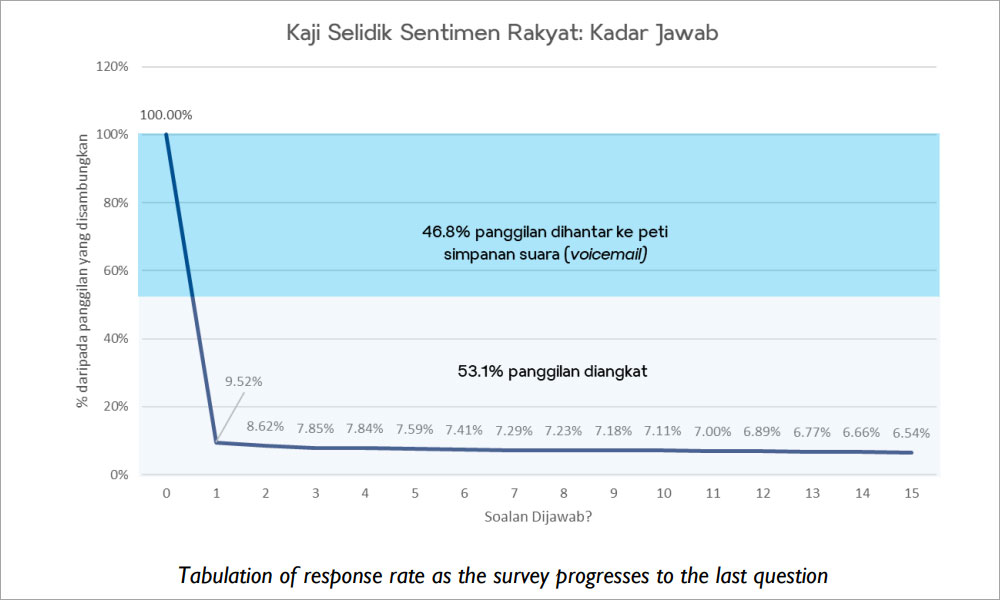

This gives a response rate (the share of voters called who picked up, rather than the call going to voicemail or going unanswered) of 6.54 percent, and a completion rate (the share of those who picked up who went on to answer all the questions) of 10.64 percent.
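Both rates are simple ratios over the call figures given above. The minimal Python sketch below shows the arithmetic; the function names are illustrative and are not part of I-CPI's system. One caveat: dividing 160,761 by exactly 2,500,000 gives about 6.43 percent, so the published 6.54 percent implies that somewhat fewer calls were placed (roughly 2.46 million), which suggests the "2.5 million" figure is rounded.

```python
def response_rate(picked_up: int, dialled: int) -> float:
    """Percentage of dialled voters who picked up the call."""
    return 100 * picked_up / dialled


def completion_rate(completed: int, picked_up: int) -> float:
    """Percentage of voters who picked up and went on to answer every question."""
    return 100 * completed / picked_up


PICKED_UP = 160_761  # voters who answered the call (published figure)
COMPLETED = 17_107   # voters who finished the survey (published figure)

print(f"completion rate: {completion_rate(COMPLETED, PICKED_UP):.2f}%")

# "2.5 million" is a rounded figure, so this ratio is only approximate.
print(f"response rate if exactly 2.5m were dialled: "
      f"{response_rate(PICKED_UP, 2_500_000):.2f}%")

# Number of dialled calls implied by the published 6.54 percent response rate.
print(f"calls implied by 6.54%: {PICKED_UP / 0.0654:,.0f}")
```

The completion rate reproduces the published 10.64 percent exactly; the response rate depends on the precise number of calls dialled, which the article gives only approximately.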

We publish these figures (the number of voters called, the number who responded and the number who completed the survey) so that the public can benchmark our findings against internationally recognised surveys by comparing response rates.

We hope other pollsters will adopt this practice in future, so that polls can be compared with one another and their reliability assessed.

Given average response and completion rates, a poll that reports findings from 1,500 voters would typically have had to call between 100,000 and 150,000 voters at random (the completion rate is usually lower when the questions concern voting preference).

If a poll claims to have obtained 1,500 complete interviews from only 5,000 voters called, its extremely high response and completion rates would flag possible irregularities, precisely because a survey's response rate can be benchmarked internationally.
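The benchmarking argument in the two paragraphs above amounts to working backwards through the call funnel: completed interviews = calls dialled × response rate × completion rate. The sketch below assumes this survey's own published rates (6.54 and 10.64 percent) as the benchmark; the function names are hypothetical. At those rates, 1,500 completes would need roughly 215,600 calls, above the typical 100,000 to 150,000 range, which is consistent with the remark that completion rates are lower for voting-preference questions.

```python
import math


def calls_needed(target_completes: int, response: float, completion: float) -> int:
    """Numbers to dial so that, on average, target_completes interviews finish."""
    return math.ceil(target_completes / (response * completion))


def implied_yield(completes: int, dialled: int) -> float:
    """Fraction of dialled numbers that ended in a completed interview."""
    return completes / dialled


RESPONSE = 0.0654    # this survey's published response rate
COMPLETION = 0.1064  # this survey's published completion rate
benchmark = RESPONSE * COMPLETION  # completes per number dialled, about 0.7%

# Calls needed for 1,500 completed interviews at this survey's rates.
print(f"calls needed for 1,500 completes: "
      f"{calls_needed(1_500, RESPONSE, COMPLETION):,}")

# A hypothetical poll claiming 1,500 completes from only 5,000 calls.
claimed = implied_yield(1_500, 5_000)
print(f"claimed yield {claimed:.1%} vs benchmark {benchmark:.2%} "
      f"({claimed / benchmark:.0f}x higher)")
```

A claimed yield of 30 percent against a benchmark of under 1 percent is the kind of gap that would flag a poll for closer scrutiny.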

We can confirm that our response rate for this survey is consistent with our previous surveys and with response rates around the world. For example, according to “Assessing the Representativeness of Public Opinion Surveys” by the Pew Research Centre, a nine percent response rate is typical for the United States.

In the United Kingdom, the figure is 6.66 percent, according to Martin Boon of ICM International speaking to BBC News. Our latest survey's rate of 6.54 percent falls within the band of response rates seen elsewhere in the world (if anything, one would expect a much lower response rate among Malaysian voters than among American and British voters). For further reading, please refer to the Pew Research Centre.

The low response rate raised by Azizuddin may give rise to a few concerns about the accuracy and representativeness of the survey finding.

One concern regularly raised by members of the public is that a low response rate results in a small sample. There has long been confusion about how large a sample needs to be to represent national voting sentiment accurately.

We often hear that a survey of 17,000 voters is useless because the sample amounts to only 0.05 percent of the Malaysian population and is therefore too small to be representative.

The final sample for the survey Azizuddin referred to was 17,107 respondents, which gives a margin of error from random sampling of plus or minus two percent. By any standard, a sample of 17,107 respondents is very large; many pollsters would regard it as more than necessary.

In theory, if the sample is chosen randomly with as little bias as possible, a sample of 1,000 can be as representative as a sample of 100,000, though with a wider margin of error. Polls in the USA and Europe typically have samples of between 1,000 and 2,000 respondents, and these are accepted internationally.
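The point made in the paragraphs above, that precision depends on the number of respondents and hardly at all on the share of the population sampled, can be checked with the textbook margin-of-error formula for a proportion. The sketch below assumes simple random sampling, a 95 percent confidence level (z = 1.96) and the worst case p = 0.5, and uses 32 million as a rough stand-in for Malaysia's population; it is an illustration, not I-CPI's own calculation. Under these assumptions the formula gives about 0.75 points for 17,107 respondents, tighter than the two percent quoted above, which may include allowances (for example, for weighting) that the article does not describe.

```python
import math
from typing import Optional


def margin_of_error(n: int, population: Optional[int] = None,
                    p: float = 0.5, z: float = 1.96) -> float:
    """Margin of error for a sample proportion under simple random sampling.

    z = 1.96 gives a 95 percent confidence level; p = 0.5 is the worst case.
    If a population size is given, the finite population correction is applied.
    """
    moe = z * math.sqrt(p * (1 - p) / n)
    if population is not None:
        moe *= math.sqrt((population - n) / (population - 1))
    return moe


POPULATION = 32_000_000  # assumption: rough size of Malaysia's population in 2017

for n in (1_000, 2_000, 17_107, 100_000):
    print(f"n = {n:>7,}: +/-{margin_of_error(n):.2%} without correction, "
          f"+/-{margin_of_error(n, POPULATION):.2%} with correction")
```

The finite population correction barely changes any of the figures: going from 1,000 to 100,000 respondents narrows the margin from about 3.1 to 0.3 points, but the fraction of the population sampled makes almost no difference at these sizes.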

Another concern Azizuddin might have, given our overall response rate, is that the response rate for specific demographic groups may be lower still, effectively excluding those groups from the survey.

Over six months of continuous polling, we have been able to track how particular demographic sub-groups (for example, non-bumiputera women above 50 years old from the Peninsular East Coast) respond to our calls.

Some groups are more likely to answer surveys, some less so. Our proprietary computerised polling system is designed to decide automatically whether to call more or fewer numbers from each sub-group, to compensate for these differing response rates.

This ensures that the breakdown of the responses at the end of the process is demographically representative of Malaysian voters.
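The compensation described above can be illustrated with a simple allocation rule, substituted here because I-CPI's own logic is proprietary and may differ: to end up with each sub-group's target share of completed interviews, dial each sub-group in inverse proportion to its observed yield (completed interviews per number dialled). The sub-group names, population shares and yields below are hypothetical placeholders, not I-CPI data.

```python
from dataclasses import dataclass


@dataclass
class SubGroup:
    name: str
    population_share: float  # share of all voters in this sub-group (hypothetical)
    observed_yield: float    # completed interviews per number dialled (hypothetical)


def dial_plan(groups: list[SubGroup], target_completes: int) -> dict[str, int]:
    """Numbers to dial per sub-group so that completes follow population shares.

    Each group is dialled in inverse proportion to its observed yield, so a
    group that rarely completes the survey is called more often.
    """
    return {
        g.name: round(target_completes * g.population_share / g.observed_yield)
        for g in groups
    }


groups = [
    SubGroup("group A", 0.60, 0.008),  # relatively easy to reach
    SubGroup("group B", 0.30, 0.005),
    SubGroup("group C", 0.10, 0.002),  # hard to reach, so dialled heavily
]

for name, calls in dial_plan(groups, 17_000).items():
    print(f"{name}: dial {calls:,} numbers")
```

If every sub-group were dialled equally instead, the hard-to-reach group C would be under-represented among completed interviews. A running system would presumably re-estimate the observed yields as results come in, rather than fixing them in advance as this sketch does.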

Political attitudes of respondents biased?

However, Azizuddin’s main contention (quite apart from demographics such as age, ethnicity and gender) was that the political attitudes of our respondents were biased because the questions leaned heavily against BN policies.

We put statements about issues such as 1MDB and GST to respondents, asking whether they agreed or disagreed. Azizuddin argued that since all the statements were starkly unfavourable to BN, many BN supporters were likely to have hung up before finishing the survey.

In reality, many of the people contacted hung up before the first question. The “agree/disagree” statements about GST, 1MDB and other issues were not posed until the third question. The low response rate was primarily due to a very large share of our calls going to voicemail or going unanswered, before anyone picked up at all.

This is normal, and our response rate so far is comparable to that of telemarketing in Malaysia.

In fact, the reverse of the associate professor’s conclusion is probably true. Our experience over the past six months indicates that, if anything, the respondents to our surveys are biased towards supporting BN.

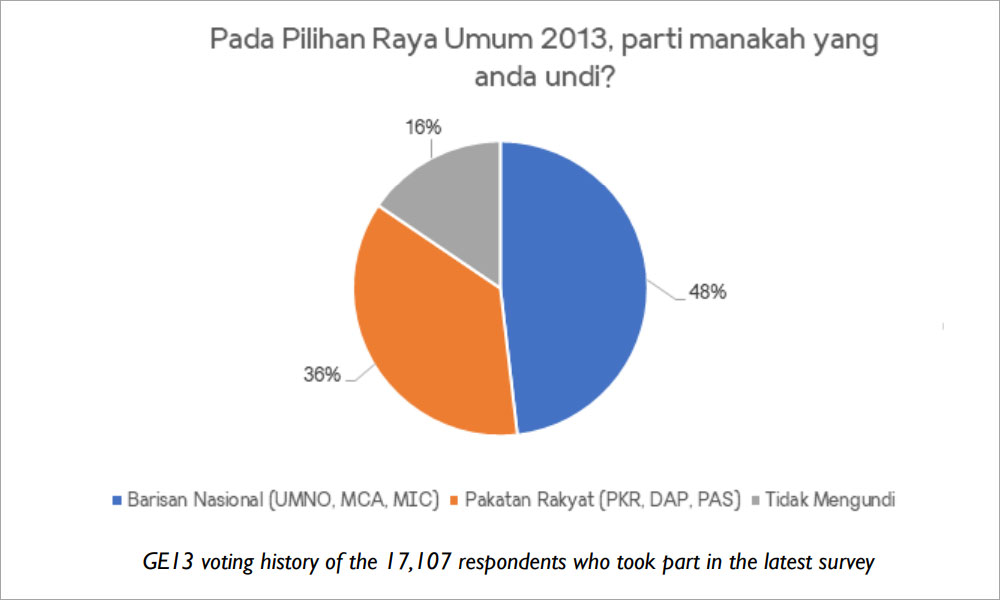

A good indication of this is a question in our latest survey asking respondents how they voted in GE13. In the actual result, 43 percent of registered voters voted for Pakatan Rakyat and 40 percent for BN, with 15 percent not voting.

In contrast, our survey respondent profile is clearly skewed in favour of BN.

Indeed, this skew is largely why we designed the statements to be more “Harapan-friendly”: to help compensate for it.

We hope these explanations address the associate professor's concerns (and those of anyone else who has doubted our survey findings). As a matter of principle and in the spirit of responsible polling, we disclose our response rates to ensure the credibility of our results.

It goes without saying that Malaysians, many of whom have probably hung up on such calls themselves, would be very surprised by a high response rate. In future, we hope other pollsters will adopt the same transparency.

This will enhance the public’s trust in the poll numbers that are published from time to time.

Each of the 17,107 completed interviews is recorded and auditable. We welcome any further queries, and invite any party that wishes to do so to audit samples of the recorded interviews.

RAFIZI RAMLI is PKR vice-president, Pandan MP and Invoke coordinator, while SHAUN KUA is Invoke strategic director. - Mkini
